Google Just Launched Gemini, Its Long-Awaited Answer to ChatGPT

Google says there are three versions of Gemini: Ultra, the largest and most capable; Nano, which is significantly smaller and more efficient; and Pro, of medium size and middling capabilities.

Starting today, Google’s Bard, a chatbot similar to ChatGPT, will be powered by Gemini Pro, a change the company says will make it capable of more advanced reasoning and planning. A specialized version of Gemini Pro is also being folded into a new version of AlphaCode, a “research product” generative tool for coding from Google DeepMind. The most powerful version, Gemini Ultra, will be put inside Bard and made available through a cloud API in 2024.

Sissy Hsiao, vice president at Google and general manager for Bard, says the model’s multimodal capabilities have given Bard new skills and made it better at tasks such as summarizing content, brainstorming, writing, and planning. “These are the biggest single quality improvements of Bard since we’ve launched,” Hsiao says.

New Vision

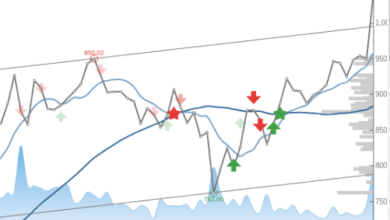

Google showed several demos illustrating Gemini’s ability to handle problems involving visual information. One saw the AI model respond to a video in which someone drew images, created simple puzzles, and asked for game ideas involving a map of the world. Two Google researchers also showed how Gemini can help with scientific research by answering questions about a research paper featuring graphs and equations.

Eli Collins, vice president of product at Google DeepMind, says that Gemini Pro, the model being rolled out this week, outscored GPT-3.5, the model that initially powered ChatGPT, on six out of eight commonly used benchmarks for testing the smarts of AI software.

Google says Gemini Ultra, the model that will debut next year, scores 90 percent on the Massive Multitask Language Understanding (MMLU) benchmark, higher than any other model including GPT-4. MMLU was developed by academic researchers to test language models on questions spanning topics such as math, US history, and law.

“Gemini is state-of-the-art across a wide range of benchmarks—30 out of 32 of the widely used ones in the machine-learning research community,” Collins says. “And so we do see it setting frontiers across the board.”